The log files under /var/log are among the things you should know about when working on Linux systems. I don't yet know all their contents, but I remember a few of them from earlier debugging sessions. I came across them while searching for an expression that would give me the largest files present on my system.

# du -ha /var | sort -n -r | head -n 10

The result from this command on my system was:

1004K /var/www/user_journeys/manual_cut_out_list.docx

996K /var/lib/dpkg/info/openjdk-7-doc.list

980K /var/lib/dkms/open-vm-tools/2011.12.20/build/vmxnet

976K /var/lib/dkms/open-vm-tools/2011.12.20/build/vmci/common

968K /var/log/auth.log.1

920K /var/lib/dkms/open-vm-tools/2011.12.20/build/vmsync

912K /var/lib/gconf/defaults/%gconf-tree.xml

884K /var/lib/dkms/virtualbox-guest/4.1.12/build/vboxsf

880K /var/lib/dpkg/info/linux-headers-3.5.0-18.list

864K /var/lib/dpkg/info/linux-headers-3.2.0-57.list
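One caveat with the command above: sort -n only compares the leading number, so an entry of 1.2M would sort below 996K. That is probably why only K-sized entries show up in my list. Here is a minimal sketch of a variant, assuming GNU sort (whose -h option understands the K/M/G suffixes). The function name largest_entries is made up for this example.

```shell
# Print the N largest files and directories below DIR, largest first.
# Assumes GNU coreutils: "sort -h" compares human-readable sizes (K, M, G).
largest_entries() {
    dir=${1:-/var}
    count=${2:-10}
    du -ah "$dir" 2>/dev/null | sort -rh | head -n "$count"
}
```

Calling largest_entries /var 10 should then list the real top ten, directories included.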

December 19, 2013

Does size matter

December 18, 2013

And I quote:

Thus, for example, read “munger(1)” as “the ‘munger’ program, which will be documented in section 1 (user tools) of the Unix manual pages, if it’s present on your system”. Section 2 is C system calls, section 3 is C library calls, section 5 is file formats and protocols, section 8 is system administration tools. Other sections vary among Unixes but are not cited in this book. For more, type man 1 man at your Unix shell prompt.

-- The Art of Unix Programming

System information

When working on Debian-based distributions, and most likely other distribution types too, you sometimes need to get system information. Here's a bunch of commands to get some info:

lsb_release -a

No LSB modules are available.

Distributor ID: LinuxMint

Description: Linux Mint 13 Maya

Release: 13

Codename: maya

uname -a

Linux SorteSlyngel 3.2.0-31-generic-pae #50-Ubuntu SMP Fri Sep 7 16:39:45 UTC 2012 i686 i686 i386 GNU/Linux

apt-cache policy aptitude

aptitude:

Installed: 0.6.6-1ubuntu1.2

Candidate: 0.6.6-1ubuntu1.2

Version table:

*** 0.6.6-1ubuntu1.2 0

500 http://archive.ubuntu.com/ubuntu/ precise-updates/main i386 Packages

100 /var/lib/dpkg/status

0.6.6-1ubuntu1 0

500 http://archive.ubuntu.com/ubuntu/ precise/main i386 Packages

apt-cache policy apt

apt:

Installed: 0.8.16~exp12ubuntu10.16

Candidate: 0.8.16~exp12ubuntu10.16

Version table:

*** 0.8.16~exp12ubuntu10.16 0

500 http://archive.ubuntu.com/ubuntu/ precise-updates/main i386 Packages

100 /var/lib/dpkg/status

0.8.16~exp12ubuntu10.10 0

500 http://security.ubuntu.com/ubuntu/ precise-security/main i386 Packages

0.8.16~exp12ubuntu10 0

500 http://archive.ubuntu.com/ubuntu/ precise/main i386 Packages

apt-cache policy python-apt

python-apt:

Installed: 0.8.3ubuntu7.1

Candidate: 0.8.3ubuntu7.1

Version table:

*** 0.8.3ubuntu7.1 0

500 http://archive.ubuntu.com/ubuntu/ precise-updates/main i386 Packages

100 /var/lib/dpkg/status

0.8.3ubuntu7 0

500 http://archive.ubuntu.com/ubuntu/ precise/main i386 Packages

dpkg -S /bin/ls

dpkg -s grub-common
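When I need this information from several machines, it is handy to bundle the commands. A minimal sketch, assuming a POSIX shell: the function names report and system_info are made up for this example, and each command is skipped if it is not installed.

```shell
# Run a command only if it exists on this system, with a small header first.
report() {
    if command -v "$1" >/dev/null 2>&1; then
        printf '== %s ==\n' "$*"
        "$@"
    else
        printf '== %s == (not installed)\n' "$1"
    fi
}

# Collect the same information as the individual commands above.
system_info() {
    report lsb_release -a
    report uname -a
    report apt-cache policy apt aptitude python-apt
    report dpkg -S /bin/ls
    report dpkg -s grub-common
}
```

Running system_info > sysinfo.txt gives you one file to attach to a bug report.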

Short post about the Debian Administrator's Handbook: it should be read by all users of Debian-based systems to understand the aptitude commands.

December 17, 2013

How many hours did you do on this or that project this year?

Yikes, it's getting close to the end of the year and I just know that somewhere someone is lurking in the shadows, waiting to check my daily hour registrations. Unfortunately, this year the tool used to post hours in is the *agile* tool redmine.

This tool is of course not compatible with the company hour registrations, so I'd better create a report by doing some cmdline stuff. Luckily, redmine can export the time spent to a csv file, so this is what I did.

Once the file is ready, the following cmdlines can be of assistance for analysing the timesheet data:

1. Find all hours spent in 2013 and dump them to a new file

grep 2013 timelog.csv > time_2013_me.csv

2. Find all projects worked on in 2013

cut -d ';' -f 1 time_2013_me.csv | sort | uniq

3. Find total amount of reported hours

cut -d ';' -f 7 time_2013_me.csv | awk '{total = total + $1}END{print total}'

4. Find all hours on a specific project

grep <project name> time_2013_me.csv | cut -d ';' -f 7 | awk '{total = total + $1}END{print total}'

5. Find the hours on everything except a specific project:

grep -v <project name> time_2013_me.csv | cut -d ';' -f 7 | awk '{total = total + $1}END{print total}'
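The per-project sums can also be done in one pass instead of one grep per project. A minimal sketch, assuming the same layout as the cut commands above (';' separated, project in field 1, hours in field 7) and hours written with a '.' decimal separator. The function name hours_per_project is made up for this example.

```shell
# Sum the hours per project from a redmine time log export.
# Assumptions: ';' separated fields, project in field 1, hours in field 7,
# hours written with a '.' decimal separator, no ';' inside quoted fields.
hours_per_project() {
    awk -F ';' '
        # Only count lines whose hours field is a plain number (skips headers).
        $7 ~ /^[0-9]+(\.[0-9]+)?$/ { hours[$1] += $7; total += $7 }
        END {
            for (p in hours) printf "%s;%.2f\n", p, hours[p] | "sort"
            close("sort")
            printf "TOTAL;%.2f\n", total
        }' "$@"
}
```

Use it as hours_per_project time_2013_me.csv. The TOTAL line should match the number from step 3.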

That's it and that's that.

December 12, 2013

Updating Linux mint ... Ubuntu style!

I have a friend who still hasn't updated his machine from Linux mint 12 :O So I promised to create a guide on how this could be done. I simulated his system on a virtual machine.

Edit: This link contains a description of the recommended Mint update method using the backup tool. You should consider using that method, as the one I'm explaining in this post is potentially dangerous (no pain no gain!).

The following is a guide on how to do rolling updates on Linux mint. The trick used is to change the package repositories from one mint version to the next, as explained here.

Every mint distribution, and Ubuntu distribution for that matter, has a distribution name attached. Linux mint12 is called Lisa, mint13 is called Maya etc. All names for Linux mint versions can be found at: old releases and for Ubuntu at: releases

The dist-upgrade trick starts with finding your current Linux mint release. If you don’t know the name already, it can be found by issuing:

grep mint /etc/apt/sources.list

deb http://packages.linuxmint.com/ lisa main upstream import

The distribution name is the first word after the URL. To get the Ubuntu name, replace mint with ubuntu in the grep expression; the list is a bit longer, but the name is still present in the same place. Here the Ubuntu version is oneiric.
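If you'd rather have just the names than the full lines, awk can pick out the third field. A minimal sketch, assuming plain "deb URL codename components" lines without bracketed options. The function name repo_codenames is made up for this example.

```shell
# Print the distribution names used by repositories whose URL matches PATTERN.
# Assumes the classic one-line format: deb URL codename components...
repo_codenames() {
    pattern=$1
    file=${2:-/etc/apt/sources.list}
    awk -v pat="$pattern" '$1 == "deb" && $2 ~ pat { print $3 }' "$file" | sort -u
}
```

repo_codenames linuxmint gives the mint name and repo_codenames ubuntu the Ubuntu ones (including variants such as oneiric-updates, if present).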

You can follow this approach when upgrading all your mint installations, but you should only go one increment at a time.

The best approach for updating is to install the system you’d like to update in a virtual machine, and then apply the update to that system to see what actually happens. Using this approach may seem somewhat overkill, but it is likely to save you a lot of work when trying to fix your broken installation later.

Before you begin you should know that the mint-search-addon package cannot be resolved.

sudo aptitude remove --purge mint-search-addon

If you do not have a login manager, e.g. mdm, you should install one, then log out and back in to check that the new manager works flawlessly.

sudo aptitude install mdm

Installing and configuring mdm should ensure that you’re not logging into X using the startx script, as this will most likely break your X login after the dist upgrade. The Ubuntu X script will replace the mint X script, leaving you at an old-school login shell.

sudo aptitude upgrade && sudo aptitude clean

Once the updates have run, reboot to ensure that any unknown dependencies are set straight prior to the actual dist upgrade.

sudo reboot

When the system is back in business, it’s time to start the actual dist upgrade. We’ll be doing the upgrade Ubuntu style. Issue (yes, I know it's a long command):

sudo cp /etc/apt/sources.list /etc/apt/sources.list.backup && sudo perl -p -i -e "s/lisa/maya/g" /etc/apt/sources.list && sudo perl -p -i -e "s/oneiric/precise/g" /etc/apt/sources.list && sudo apt-get update && sudo apt-get dist-upgrade && sudo apt-get autoremove && sudo apt-get autoclean
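Before running the long command on a real system, the name substitution can be previewed without touching the file. A minimal sketch using sed instead of perl -p -i, with the same two replacements as in the command. The function name rewrite_sources is made up for this example.

```shell
# Print FILE with the old distribution names replaced, leaving FILE untouched.
# The names are the ones from this post (lisa -> maya, oneiric -> precise).
rewrite_sources() {
    sed -e 's/lisa/maya/g' -e 's/oneiric/precise/g' "${1:-/etc/apt/sources.list}"
}
```

Piping the result into diff, as in rewrite_sources | diff -u /etc/apt/sources.list -, shows exactly which lines the in-place edit would change.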

Notice that the dist upgrade is done using apt-get and not aptitude. This is recommended by Ubuntu, so I’ll use it here, but this is the only place.

You should follow the dist upgrade closely, as there will be issues with respect to your current machine’s configuration that you’ll need to resolve along the way.

BEWARE: You should not blindly accept changes to your existing /etc/sudoers file! That is the reason why -y has not been added to the dist-upgrade command. If you are in doubt, select the package maintainer's version. I usually do this on any update; dpkg keeps your old version next to the new one, so you’ll always be able to find your old configuration.

With the new packages installed, cross your fingers, hope for the best, and reboot.

sudo reboot

If you get a black screen when you reboot your (virtual) machine, it is most likely because the graphical support has failed. Try hitting Ctrl+Alt+F1 to get an old-school login prompt. Log in, then run the upgrade in the terminal until there’s nothing left.

sudo aptitude upgrade

My upgrade stopped at the mint-search-addon package for Firefox, but you have removed this package so it should not cause any problems. If it does anyway run the remove command from above again.

Once all the upgrades have run, you’re ready to start X to get a feel for what the problems with going graphical may be. In my case it was a missing setting for the display manager: I had only lightdm installed, which attempted to start an Ubuntu session :(

Use the mdm display manager to get things up and running. If you get the missing file /usr/share/mdm/themes/linuxmint/linuxmint.xml dialog, it means you’re missing the mint-mdm-themes package, so issuing:

sudo aptitude install mdm mint-mdm-themes mint-backgrounds-maya mint-backgrounds-maya-extra

sudo service mdm start

should bring you to a graphical login prompt. All you have to do now is resolve the other dependencies that you may have.

My new kernel could not be configured because a link was missing in grub: /usr/share/grub/grub-mkconfig_lib must be either a symbolic link or a copy. The symbolic link to /usr/lib/grub/grub-mkconfig_lib can be created by:

cd /usr/share/grub/

ln -s /usr/lib/grub/grub-mkconfig_lib grub-mkconfig_lib

then

dpkg --configure -a

This fixed the missing configurable parts for the new kernel dependencies. Now the kernel image from the old Linux mint12 system must be removed, because it interrupts aptitude and dpkg. The old kernel is removed by:

sudo aptitude remove linux-image-3.0.0-32-generic

Finally, reboot. Wait. Then, once the system is up again, as it should be, you’ll need to activate the new repository sources, since dist-upgrade only switches packages to the new distribution's packages. It does not automatically choose the latest versions of those packages, so issue:

sudo aptitude update

sudo aptitude upgrade

Voila, you should be good to go. Some indexes may not be hit and some packages may not be installed. Check the update status from aptitude update: there’s a [-xx] counter at the bottom of the terminal, where - means there’s still stuff you could upgrade. Now, if you're up for it, you should try to update to Linux mint 14 (nadia) from this platform ;)

Labels:

aptitude,

Linux mint lisa,

linux mint maya,

mint update

December 10, 2013

Fortune cookies

Fortune cookies, the only computer cookies worth saving.

-- John Dideriksen

And there I was, blogging about setting up an apt repository on your website, when suddenly the idea of creating a fortune cookie package struck me. To use the fortunes you'll need fortunes installed, simply issue:

#sudo aptitude install fortunes

Fortune cookies? But how do you get those into your machine, and how do you handle the crumb issues?? Do you install a mouse?

That awkward moment when someone says something so stupid,

all you can do is stare in disbelief.

-- INTJ

Fortune cookies are deeply explained right here, too deeply some may say, but I like it. How you bake your own flavored cookies is a completely different question, but the recipe is extremely simple. Once you have your text file ready, it should look like this, according to the ancient secret recipe.

Fortune cookies, the only computer cookies worth saving.

-- John Dideriksen

%

That awkward moment when someone says something so stupid,

all you can do is stare in disbelief.

-- INTJ

%

I've found him, I got Jesus in the trunk.

-- George Carlin

Now all you have to do is install your fortunes using strfile. Issue:

#sudo cp quotes /usr/share/fortunes

#sudo strfile /usr/share/fortunes/quotes

And then

#fortune quotes

Should hit one of your freshly baked fortune cookies. Putting these freshly baked babies in a jar, in form of a Debian package, is just as easy. Simply create a Debian package following the recipe here.
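If you keep each quote in its own small text file, the cookie file can be assembled by putting a % line between them. A minimal sketch, assuming a POSIX shell. The function name build_fortune_file and the file names in the usage line are made up for this example.

```shell
# Usage: build_fortune_file OUTPUT QUOTE_FILE...
# Concatenates the quote files into OUTPUT with a lone % line between them,
# which is the separator the recipe above uses.
build_fortune_file() {
    out=$1
    shift
    : > "$out"                      # start from an empty output file
    first=1
    for quote in "$@"; do
        [ "$first" -eq 1 ] || printf '%%\n' >> "$out"
        cat "$quote" >> "$out"
        first=0
    done
}
```

For instance, build_fortune_file quotes dideriksen.txt intj.txt carlin.txt produces the quotes file, which you then copy and run strfile on as shown above.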

November 29, 2013

Making files

Make files, phew!? Admittedly, I previously used the shell approach, adding mostly bash commands to the files I made. However, I somehow felt I had to change that, since making make files generic without utilising the full potential of make is both hard and rather stupid.

I have a repository set up for Debian packages. These packages are of two types: some are simple configuration packages, and others are Debian packages made from installation-ready 3rd party tarballs. I aim at wrapping these 3rd party packages into Debian packages for easy usage by all my repository users.

The setup for creating the packages is a top-level directory called packages, which contains the source for each of the individual packages. These could be Altera, a package that contains a setup for the Altera suite, or Slickedit, a package containing the setup for Slickedit used in our users' development environment.

The common rule set for all the individual package directories is:

1. Each directory must be named after the package, i.e. the Slickedit package is in a directory called slickedit

2. Each directory must contain a Makefile

3. Each directory must contain a readme.asciidoc file (documentation x3)

4. Each directory must contain a tar.gz file with the source package

5. Each directory must contain at least one source directory with the suffix -package

The above rules give the following setup for the Slickedit package:

#tree -L 1 slickedit/

slickedit/

├── Makefile

├── readme.asciidoc

├── slickedit-package

└── slickedit.tar.gz

1 directory, 3 files

The make file targets used by the system that drives the package build routine are all, clean and distclean: all extracts and builds the Debian package, clean removes the Debian package, and distclean removes the extracted contents of the -package directory.

Furthermore, the make file contains three targets named package, pack and unpack: package builds the Debian package from the -package directory, pack creates a tarball from the -package directory in case there are changes to the package source, and unpack extracts the tarball into the -package directory.

Make file for the packages:

DEBCNTL = DEBIAN
TARFLAGS = -zvf
EXCLUDES = --exclude $(DEBCNTL)

# These are the variables used to set up the various targets
DIRS = $(subst /,,$(shell ls -d */))
PKGS = $(shell ls *.tar.gz)
CLEAN_DIRS:=$(subst package,package/*/,$(DIRS))
DEBS:= $(DIRS:%-package=%.deb)
TGZS:= $(DIRS:%-package=%.tar.gz)

# These are the targets provided by the build system
.PHONY: $(DIRS)

all: unpack package

package: $(DEBS)

pack: $(TGZS)

unpack:
	find . -name '*.tar.gz' -type f -exec tar -x $(TARFLAGS) "{}" \;

clean:
	rm -vf $(DEBS)

distclean: clean
	ls -d $(CLEAN_DIRS) | grep -v $(DEBCNTL) | xargs rm -fvR

# These are the stem rules that set the interdependencies
# between the various output types
$(DEBS): %.deb: %-package

$(TGZS): %.tar.gz: %-package

# Stem targets for generating the various outputs
# These are the commands that generate the output files
# .deb, .tar.gz and -package/ directories
%.deb: %-package
	fakeroot dpkg-deb --build $< $@

%.tar.gz: %-package
	tar -c $(TARFLAGS) $@ $< $(EXCLUDES)
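The DEBS and TGZS variables simply swap the -package suffix of each directory for .deb and .tar.gz. To see which names that gives in a package directory, the same mapping can be sketched in shell. This only illustrates the substitution and is not part of the build; the function name package_targets is made up for this example.

```shell
# For every *-package directory below DIR, print the directory name and the
# .deb and .tar.gz names that the substitution references would derive from it.
package_targets() {
    for dir in "${1:-.}"/*-package/; do
        [ -d "$dir" ] || continue       # the glob matched nothing
        name=$(basename "$dir")         # e.g. slickedit-package
        stem=${name%-package}           # e.g. slickedit
        printf '%s %s.deb %s.tar.gz\n' "$name" "$stem" "$stem"
    done
}
```

Run in the slickedit directory shown earlier, it should print slickedit-package slickedit.deb slickedit.tar.gz.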

The major pitfall I had when creating the make file was figuring out the rules for the .tar.gz, .deb and -package targets. The first two are straightforward to create using the % modifier, but when creating the -package target I ran into a circular dependency, because the pack and unpack rules have targets that are contradictory when defined using static pattern rules:

%.tar.gz: %-package

and the opposite

%-package: %.tar.gz

This caused the unpack target to execute both the extract and the create-tarball rules, leading to the error of a Debian file containing only the DEBIAN directory, not much package there. Since the aim was a generic make file, one to be used for all packages, I ended up using the find command to find and extract all tarballs. I figured this was the easiest approach, since using the static pattern rules didn't work as intended.

Labels:

deb. packages,

Make,

Makefiles,

reprepro

November 15, 2013

Custom spotify playlists

The problem with spotify, iTunes etc. is that there's really no good support for playlists. By playlists I mean importing, say, csv lists. Why would I need a list like this? Because every now and then I'd like to just create a simple playlist containing the artist name and track as ARTIST,TRACK, repeated line by line.

That's where ivyishere comes in, a cool online tool that can export your csv playlists, amongst others, to spotify. Thanks.

How do I get a csv playlist? In this case I found the work of another House MD fan, who spent the time to organize all tracks from all shows on a website. I'd like that playlist without having to add every single song by hand.

This is what I did.

1. Copy the neatly ordered html table using your mouse, from top to bottom, selecting all the songs you'd like in your playlist.

2. Open libreoffice calc and paste the songs there, select the html formatted table and wait.

3. Delete the rows you don't need, keeping only artist and track.

4. Copy the artist and track columns, paste them in a new document, then save this document as csv.

5. Fire up your shell and perl the shit outta the csv.

cat housemd.playlist.csv # to see what you have to deal with

Figure out the regular expressions you'll need. Yes, you can most likely find a more combined expression than I did; nevertheless, my way worked ;)

perl -p -e "s/^,$//g" # Blank out the lines containing just ,

perl -p -e "s/^\s$//g" # remove lines containing just whitespace

perl -p -e "s/^'No.*,$//g" # blank out the lines containing the No Commer ... text

perl -p -e "s/\"|\'//g" # remove the ' and " from all artists and songs

The final expression for me was:

perl -p -e "s/^,$//g" housemd.playlist.csv | perl -p -e "s/^'No.*,$//g" | perl -p -e "s/^\s$//g" | perl -p -e "s/\"|\'//g" > housemd.playlist.2.csv

6. Cat your file to see if it is what you'd expect

7. Upload your file to ivyishere and wait.
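The four perl passes from step 5 can also be folded into a single one. A minimal sketch, not the pipeline I actually ran: it skips the unwanted lines outright instead of blanking them first, and it treats any whitespace-only line as empty. The function name clean_playlist is made up for this example, and \x27 is just a way to write ' inside a single-quoted shell string.

```shell
# Clean a csv playlist in one pass: drop junk lines, strip quote characters.
clean_playlist() {
    perl -ne '
        next if /^,$/;            # lines containing just a comma
        next if /^\x27No.*,$/;    # lines starting with the quoted No ... text
        next if /^\s*$/;          # empty or whitespace-only lines
        s/["\x27]//g;             # strip single and double quotes
        print;
    ' "$@"
}
```

Use it as clean_playlist housemd.playlist.csv > housemd.playlist.2.csv and cat the result as in step 6.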

Thanks perl and ivyishere.

November 04, 2013

Asciidoc filters

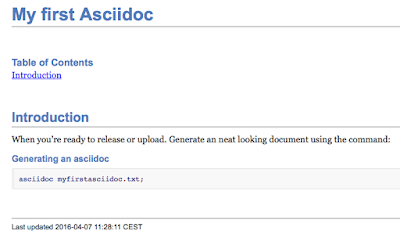

Using asciidoc? No?! How come? Because if you're writing technical documentation, blogging or books, this is one of the best programs available.

Asciidoc is installed using your distribution's or system's package manager, e.g. with MacPorts on OSX:

$ sudo port install asciidoc

The asciidoc files for this short example can be downloaded from here: my.first.asciidoc & filters.asciidoc.

Substitute port with apt-get, aptitude, yum or whatever the package manager on your system is. Now that asciidoc is installed, simply start writing your document, blog or book in a text file in your favorite editor. Open it and paste the following text.

= My first Asciidoc

:author: <your name>

:toc:

== Introduction

When you're ready to release or upload. Generate an neat looking document using the command:

.Generating an asciidoc

[source,sh]

asciidoc my.first.asciidoc;

To see the document that you have just created, simply follow the document's instructions and run the command, with your document name obviously.

$ asciidoc my.first.asciidoc

The document renders like this using the standard asciidoc theme:

To get started quickly with asciidoc, Powerman has created a cheat sheet; use this to see some of the things you can do. One of the things not included in the cheat sheet is the fact that asciidoc allows you to execute scripts on the command line.

This is extremely useful when writing technical documentation where you'll need to include information like realtime directory contents information or reporting logged in users directly in your document.

Adding a shell command ensures that the command is run when the document is being generated, every time the document is generated. Add the following to your document.

.Listing asciidoc's present in the current directory

[source,sh]

----

sys::[ls *.asciidoc]

----

Now, instead of rendering the actual command, asciidoc executes the command and renders the result. In this case the normal output of the /ls *.asciidoc/ command.

As you can see, I have 2 asciidoc files in my current directory. If I wanted to, I could include the filters.asciidoc file in the one I'm currently writing. To include filters.asciidoc, add the following text to your asciidoc file:

Adding the next document after this line

:leveloffset: 1

include::filters.asciidoc[]

:leveloffset: 0

And were back in the original document.

The include::<filename>[] statement is where the magic happens. This is the line that includes the filters.asciidoc file. The included file doesn't have to be an asciidoc document; any file can be used.

The :leveloffset: 1 line is needed for asciidoc to treat the headers of the included document as one level below the current header level. After the include statement we simply pop the header level back with :leveloffset: 0, and we're back in the original document.

Notice how the included document is placed between the Adding ... and And were back in ... texts. This is a very useful feature when you have more than one person working on documentation, as it avoids a number of document merges when you're working on separate files in a team.

Did you notice the cool graphic that was included in the document? This graphic is rendered by an asciidoc plugin called Ditaa. There are several plugins available for asciidoc; some of these can be found on the asciidoc plugin page.

Each of the plugins have installation instructions and usage information included. Here's an example of the ditaa plugin. First download the ditaa.zip file. Then install it to your local users ~/.asciidoc directory.

$ mv Downloads/asciidoc-ditaa-filter-master.zip Downloads/ditaa-filter-master.zip

$ asciidoc --filter install Downloads/ditaa-filter-master.zip

The ditaa plugin is the one rendering the image displayed in my.first.asciidoc. Here's how it's done. Create a new file called filters.asciidoc and fill it with these contents.

= Asciidoc filters example

:author: John Dideriksen

:toc:

== testing various filters on the MacPort edition

The following document is used to test some of the asciidoc plugins for drawing, all examples have been taken from the authors plugin documentation page.

=== Ditaa

["ditaa"]

---------------------------------------------------------------------
+--------+   +-------+    +-------+
|        | --+ ditaa +--> |       |
|  Text  |   +-------+    |diagram|
|Document|   |!magic!|    |       |
|     {d}|   |       |    |       |
+---+----+   +-------+    +-------+
    :                         ^
    |       Lots of work      |
    +-------------------------+
---------------------------------------------------------------------

stops here

As you can see, ditaa renders the graphic from an ascii image. This is really useful since you do not have to worry about opening a new program and maintaining a separate drawing.

If your documentation is under source control you can easily track the changes in the diagrams for the documentation, just like any other changes. Ditaa is just one of many filters you can install in asciidoc, aafigure is another example of a filter.

You can list your installed asciidoc filters using the command:

$ asciidoc --filter list

/opt/local/etc/asciidoc/filters/code

/opt/local/etc/asciidoc/filters/graphviz

/opt/local/etc/asciidoc/filters/latex

/opt/local/etc/asciidoc/filters/music

/opt/local/etc/asciidoc/filters/source

/Users/cannabissen/.asciidoc/filters/ditaa
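In a build script it can be handy to check for a filter before generating a document. A minimal sketch that reads a listing like the one above from stdin, assuming one filter directory per line. The function name has_filter is made up for this example.

```shell
# Succeeds if the named filter appears in a filter listing read from stdin.
# Assumes one filter directory per line, each ending in /filters/NAME.
has_filter() {
    grep -q "/filters/$1\$"
}
```

For example: asciidoc --filter list | has_filter ditaa && echo "ditaa is installed".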

Notice that asciidoc comes with a set of preinstalled plugins that you can use at will. You remove an installed filter with the command:

$ asciidoc --filter remove ditaa

June 25, 2013

Viewing your protocol in Wireshark and playing with libpcap

Writing network code, eh? At times I am, and for this particular network stuff I needed a protocol dissector for Wireshark, as one of these makes it that much easier to verify that you're sending the correct stuff on your wire.

First off, you'll most likely need to reconfigure the Wireshark installation to allow specific users to capture. This setup also means you avoid running Wireshark as root. [README]

# sudo dpkg-reconfigure wireshark-common

Answer yes to the question about allowing users to capture on interfaces. Next, you'll need to add the user(s) to the wireshark group to allow them to use the sniffer tool!

# sudo usermod -a -G wireshark $USER

Note that your shell expands $USER before sudo runs, so this adds the user who invokes the command. If you run it from a root shell or an installation script, $USER is root, and you'll have to replace it with the actual user name.

Finally, for your group changes to take effect you'll need to log out of GNOME and back in :O I know, it sucks, but that's what you'll have to do!

Editor's note: You can use this neat trick to force a logout after package installation in your scripts. Neato!

# gnome-session-quit --logout --no-prompt
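To see whether the group change has reached your current session, something like the following sketch could be used. The helper name session_in_group is made up for this example; id -nG without a user name lists the groups of the running session, which is exactly what only changes after logging in again.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the current session is a member of group $1.
# "id -nG" (no user argument) reports the groups of this process, not the
# contents of /etc/group, so it reflects what the session can actually use.
session_in_group() {
    id -nG | tr ' ' '\n' | grep -qx "$1"
}

if session_in_group wireshark; then
    echo "this session is in the wireshark group"
else
    echo "not in the wireshark group (yet), log out and back in"
fi
```

If it still reports "not yet" after usermod, the log out and log in step has not happened for that session.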

Onwards to the protocol stuff: [source]

Open your editor and create a simple lua dissector [source].

Now you'll need libpcap to send some data over the wire. I prefer libpcap, as most of this code will be portable to Windows using WinPcap. This way you won't need a strategy pattern for the socket stuff, as libpcap/WinPcap serves as this pattern.

June 18, 2013

Some mint updates

First of all you'll need Netflix ;) Just like I did, and finally someone created a package. Secondly, I needed the latest key for the Spotify Linux package. Issue:

sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-keys 94558F59

sudo aptitude update

and you should have your sources running without the expired Spotify key.

April 29, 2013

Painting in mint 14

No need since David did all the work ;)

April 25, 2013

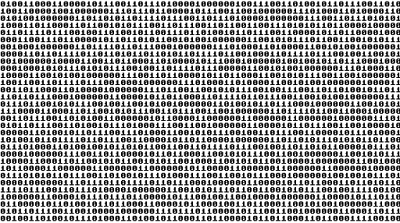

Creating a binary overlay image with the gimp

I saw a cool image at the Last Ninja Archives a couple of weeks ago. I got so inspired that I had to try to do something similar. First I needed a binary text file; there is an online ASCII to binary converter, so you don't have to write one on your own.

Looking at the Last Ninja Archive kinda brought me back in time, so I decided to get a Commodore 64 font. To install the font on OS X, use the Font Book tool. In Linux Mint (or Ubuntu) run the command:

# gksudo "nautilus"

Then click the install font button in the top right corner of Nautilus. This should add the font to all the needed places. Here is some additional help for installing fonts in Linux and some for adding fonts to the Windows version of the Gimp. (I found that I also needed to reboot the window$ machine to see the font (what a surprise!! :D))

The look I'm after is an overlay of transparent binary digits that lets you see The Last Ninja through the digits. Also, the image should look like it is running on an old monitor, filled with scan lines.

To create the image you'll need to know how to use layers, the color select tool and the fill tool. Before you start, take some time to tune in to slayradio, and then fire up the Gimp and paint away.

First, create the video overlay

- Open the source image.

- Press 'command' d to duplicate the image.

- Select: filters | distorts | video.

- Keep the default settings in the video dialog, perfect old scanline look.

- Press ok and see the result.

- Save the image so you don't lose it.

Second, create the binary text

- Goto ASCII2BinaryConverter.

- Paste a portion of ASCII encoded text and press convert.

- Copy the converted binary text.

The number of character rows is the image height divided by the font size: 312px/10px = 31 rows (rounded down).

With 55 characters per row, the number of binary digits needed is characters * rows = 55 * 31 = 1705.

Each ASCII character converts to 8 binary digits (8 bits), so you'll need to convert at least 1705/8 ≈ 214 ASCII characters all in all. Then you'll need a few more just to align the binary text nicely within the image.
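If your image or font has other dimensions, the same arithmetic can be redone in the shell. This is only a sketch: the variable names are made up, and the numbers are the ones from the calculation above.

```shell
#!/bin/sh
# Sizes taken from the calculation above; change them to fit your own image.
image_height=312   # px
font_size=10       # px
chars_per_row=55   # binary digits that fit on one row

# Shell arithmetic is integer arithmetic, so the division rounds down.
rows=$((image_height / font_size))
binary_digits=$((chars_per_row * rows))

# Each ASCII character becomes 8 binary digits; adding 7 first rounds up.
ascii_chars=$(( (binary_digits + 7) / 8 ))

echo "rows: $rows"
echo "binary digits: $binary_digits"
echo "ASCII characters to convert: $ascii_chars"
```

The rounding up matters: 213 characters would leave the last row one digit short.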

Third, create the binary text image

- Create a new image in the same size as the source image with white background color.

- Select the text tool and cover the whole image area.

- Choose: Commodore font size 10, un-tick anti aliasing, and paste all your binary text.

- Color select the binary text, using the color selector tool, un-tick no feather.

- Copy the selection from your binary text image.

- Paste as new layer on the white image.

Fourth, create the binary mask

- Select the pasted text using color select (if it is not already selected after the paste).

- Merge layer down.

- Invert selection.

- Select: menu item edit and fill with foreground color.

- Invert selection.

- Cut the letters to get a black drawing with transparent characters.

- Select none.

- Save the binary mask image.

Fifth, we'll add the binary mask to the scan line image

- Open the scan line image.

- Open the binary mask image.

- Color select the black part of the binary mask.

- Cut the mask.

- Paste as new layer on the scan line image.

- Save your image.

If you like, you can now invert the selection and cut only the zeros and ones containing the parts of the original image. This can be pasted into a new image with a transparent background, to create an image that also works on backgrounds other than black. Remember, black ninjas are the coolest.

Here's an image based on the original C= graphics, screenshot from vice.

April 22, 2013

It's a kind of Magic ... Imagemagick

Spring is here, which means it's time to dust off the old website.

First you should update your Firefox browser with some small, cool web design utilities: Firefox webdeveloper addons. Then you need to install imagemagick. This is the coolest image tool I have seen so far!

# sudo aptitude install imagemagick

Then you should have a close look at all the cool scripts that Fred has already done on your behalf. Use Fred's Imagemagick scripts.

I have a bunch of social network sites that I'd like my main web site to link to. The construction is already at my site. But, I have no neat icons :(

I looked at some different ones, found the ones I (for some reason) liked and decided to add a simple glow effect to each icon. Fred's got a script just for this, called glow.

Download the glow script and place it in your ~/bin folder. If you don't already have a personalised bin folder for all your crazy stuff, create one.

# mkdir ~/bin

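Update your .bashrc to set the path: add the line PATH=~/bin:$PATH using your favourite editor or, if your .bashrc already has a line starting with PATH=, rewrite that line with:

# perl -p -i -e 's|^PATH=.*$|PATH=~/bin:\$PATH|' ~/.bashrc

Note the single quotes: they keep the shell from expanding $PATH before perl sees it. Open a new terminal and you're ready to go. Test by issuing a

# glow

This should give you the glow script's usage text. Once that is set up you can use this simple script or create your own.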

add_glow (source)

#!/bin/sh
# Add a glow effect to every PNG in source_dir; results go to dest_dir.
USAGE="Usage: $0 source_dir dest_dir (each <name>.png in source_dir is written to dest_dir as <name>.glow.png)"
IMG_SRC_DIR=$1
IMG_DEST_DIR=$2

if [ -n "$IMG_SRC_DIR" ] && [ -d "$IMG_SRC_DIR" ]; then
    if [ -n "$IMG_DEST_DIR" ] && [ -d "$IMG_DEST_DIR" ]; then
        for IMG in "$IMG_SRC_DIR"/*.png; do
            # Skip the unexpanded pattern when no PNG files are found
            [ -f "$IMG" ] || continue
            DEST_IMG=$(basename "$IMG" .png)
            echo "processing $IMG"
            glow -a 1.7 -s 16 "$IMG" "$IMG_DEST_DIR/$DEST_IMG.glow.png"
        done
        exit 0
    else
        echo "No dest directory"
    fi
else
    echo "No source directory"
fi
echo "$USAGE"
exit 1

Place that script in the ~/bin folder as well. Now you can create one folder for the source images and one for the destination images, and run the add_glow script

# mkdir ~/glow_pngs

Assuming your source images are in ~/normal_pngs:

# add_glow ~/normal_pngs ~/glow_pngs

Voila, now you have glow effect on all your source images.
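The naming scheme used by add_glow (image.png becomes image.glow.png) relies on the shell's suffix stripping. Here is a small sketch of just that part; the function name glow_name is made up for this example and is not part of Fred's script.

```shell
#!/bin/sh
# Hypothetical helper: print the destination path for a source image.
# $1 = source image path, $2 = destination directory
glow_name() {
    base=$(basename "$1")
    # ${base%.png} removes a trailing ".png" from the file name
    echo "$2/${base%.png}.glow.png"
}

glow_name "$HOME/normal_pngs/icontexto-inside-xfit.png" "$HOME/glow_pngs"
```

The printed name matches the file referenced by the onmouseover handler in the img tag, which is why the naming has to be consistent.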

Next you'll have to use them on your web page. Here's a quick img tag modification that swaps the image when the mouse is over it, and swaps it back when the mouse leaves.

<img src="icontexto-inside-xfit.png" onmouseover="this.src='icontexto-inside-xfit.glow.png'" onmouseout="this.src='icontexto-inside-xfit.png'" alt="Xfit" width="32" height="32"/>

You can check out the result at www.dideriksen.org

Counting files

This'll be the third time I'm listing something about find. This time I'm using it to count the number of files in a directory. At work I needed to know if a specific folder would break one of our tools because it might have too many files. (Long story)

# find YOURDIR -type f | wc -l

It's wc that does the actual counting, by adding up the lines printed by find, one per file.
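To try the count on something harmless first, here is a sketch that builds a throwaway directory tree and counts the files in it. The directory and file names are made up for the example.

```shell
#!/bin/sh
# Build a small temporary tree: three files, one of them in a subdirectory.
demo_dir=$(mktemp -d)
mkdir "$demo_dir/sub"
touch "$demo_dir/a.txt" "$demo_dir/b.txt" "$demo_dir/sub/c.txt"

# -type f leaves out the directories; wc -l counts the lines find prints.
file_count=$(find "$demo_dir" -type f | wc -l)
# Some wc implementations pad the number with spaces; this trims it.
file_count=$((file_count))
echo "files: $file_count"

rm -rf "$demo_dir"
```

One caveat: a file name containing a newline is printed on two lines and therefore counted twice.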

April 16, 2013

sudo echo??? Hey, isn't that an 80s band?

If you ever need to echo stuff into a root-owned file, and I'll bet you do, tee's the answer! A plain sudo echo followed by >> file fails, because the redirection is done by your own unprivileged shell and not by sudo. Here's a snippet:

# echo "Acquire::http::Proxy \"$http_proxy\";" | sudo tee -a /etc/apt/apt.conf.d/70debconf

This updates the apt settings with the proxy server from your environment. Oh, and removing it afterwards is done with:

# sudo perl -p -i -e "s/^Acquire.*$//g" /etc/apt/apt.conf.d/70debconf
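The append behaviour of tee -a can be tried without sudo on a scratch file. In this sketch a temporary file stands in for the apt configuration file, and the proxy value is only an example.

```shell
#!/bin/sh
# A temporary file stands in for /etc/apt/apt.conf.d/70debconf.
conf_file=$(mktemp)
http_proxy="http://localhost:3128"   # example value, use your own proxy

# tee opens the file itself; with sudo in front, that open runs as root.
# -a appends instead of truncating, so running it twice gives two lines.
echo "Acquire::http::Proxy \"$http_proxy\";" | tee -a "$conf_file" > /dev/null
echo "Acquire::http::Proxy \"$http_proxy\";" | tee -a "$conf_file" > /dev/null

line_count=$(grep -c '^Acquire' "$conf_file")
first_line=$(head -n 1 "$conf_file")
echo "Acquire lines: $line_count"
echo "first line: $first_line"

rm -f "$conf_file"
```

Since every run appends another line, it pays to remove the old entry before adding a new one.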

Have fun ;)

April 12, 2013

C++ posix thread wrapping

Here's a short story on using posix threads and getting them into c++ objects if that's your preferred language. Tutorial link to the C version. Threads info.

The following source is my two cents on C++ posix threads. There are two files: thread.h and thread.cpp. They create an inheritable thread class that you can use to implement your specialised threads.

Now, if you use the code, remember that you'll have to link it against the pthread library (libpthread). Do this by adding the option -lpthread to your compiler command line (or in your Makefile / Makefile.am).

Here are some implementation details on how the thread code can be used. They're available in the incoming_connection.h and incoming_connection.cpp files, but you can't expect to build these directly, as there are some files missing.

These files are just for showing the intent of the thread implementation. Have fun! And yes you should sometimes just read code, it's like reading a good book!

April 05, 2013

Setting up a debian repository with reprepro

So you're rolling your own?! Let's distribute 'em to the masses then, you know they want them! I'm using reprepro for distribution; installing is straightforward, just check the guide in the link.

The repository I have is located remotely on a server machine, where I can only ssh and scp packages to either my home folder or the repository folder. Compared to a local repository, this setup has some drawbacks when scripting repository updates.

When working with ssh and tools like this, you should set up ssh-agent, as it will be really, really helpful. So, unless you get off on typing your password to the remote machine all the time, configure ssh-agent.

The repository database is present on the repository_server in the normal www directory, /var/www/repository. This is the directory you should use when setting up the repository. The repository user only has write access to the user's home folder and the repository folder on the server:

repository_user@repository_server:/home/repository_user & repository_user@repository_server:/var/www/repository

To include packages in the remote repository, the binary packages must first be uploaded to the repository server and then included in the repository. The upload is done from your local machine and the inclusion is done remotely.

The copy part is straightforward using scp:

scp package repository_user@repository_server:/home/repository_user

The second part, the package inclusion, must be run on the repository_server. You do this by feeding the script to the ssh connection:

ssh repository_user@repository_server << ENDSSH

<your script>

ENDSSH
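One thing to keep in mind with this construct is where the variables get expanded. The sketch below uses a local sh instead of ssh so it can be tried anywhere; the variable name greeting is made up. With an unquoted delimiter the text is expanded before it is sent, with a quoted delimiter it is sent as is.

```shell
#!/bin/sh
greeting="set locally"   # deliberately not exported to child shells

# Unquoted delimiter: $greeting is replaced before the child shell reads it.
unquoted=$(sh << EOF
echo "$greeting"
EOF
)

# Quoted delimiter: the text is passed on untouched and expanded by the
# child shell, where the variable does not exist.
quoted=$(sh << 'EOF'
echo "${greeting:-not set here}"
EOF
)

echo "unquoted: $unquoted"
echo "quoted:   $quoted"
```

For a script fed to ssh, the unquoted form is what lets local variables such as the package name reach the remote side, while things like ~ are still expanded on the server.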

The following listing is the script that I use to maintain my repository

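#!/bin/bash
# Upload a .deb to the repository server and include it with reprepro.
# Arguments: package file name (in the current directory), repository directory

function die {
    echo "$1"
    echo
    exit 1
}

package=$1
repro_dir=$2
user="<repository_user>"    # replace with your repository user
host="<repository_server>"  # replace with your repository server
ext=deb
backup_dir=old_packages
home_dir=/home/$user

# Stop if no package was given or the file does not exist locally.
test -n "$package" && test -f "$package" || die "No package!"

scp "$package" "$user@$host:$home_dir"

# The here-document delimiter is unquoted, so the variables below are
# expanded locally before the text is sent; ~ is expanded on the server.
ssh "$user@$host" << ENDSSH
test ! -f $package && echo "No package!" && exit 1
test ! -d $repro_dir && echo "No repository dir!" && exit 1
test ! -d $backup_dir && mkdir -p $backup_dir
find $repro_dir -name "${package%.$ext}*.$ext" -exec cp -vf {} $home_dir/$backup_dir \;
md5sum ~/$package > /dev/null
cd $repro_dir
reprepro includedeb mk3 ~/$package
ENDSSH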

Labels:

debian repository,

reprepro,

ssh-agent

April 04, 2013

Company proxy configuration

If you're running your Linux box @work you most likely have the issue of using the company proxy server. I know I do :'O With the current Ubuntu / Linux Mint there are still a couple of places you'll need to configure before you have your proxy settings up and running.

The worst part is when you have to update your Windows password, 'cause you'll have to update all the configuration files on your box to be able to connect to the proxy with your new password. And a password change happens quite often these days. Here's a small note on the strategy: proxy to get your theory on the same page.

I installed cntlm, a small local proxy tool that can handle the proxy connection stuff towards your Windows servers. cntlm worked better for me, that's the only reason for not running ntml!

# sudo aptitude install cntlm

Then you'll have to configure the bugger. cntlm has a great guide for this, so follow that one. After that you'll have to set up your browser and command line utilities, and most likely your apt configuration as well.

A system update can be run through pkexec or gksudo, which is what happens when you update using the GUI tools. For that you'll have to set up the proxy server for the apt tool, because at the time of writing pkexec and gksudo do not carry over the command line proxy settings, which causes the update to fail.

First, you'll have to add a file with the proxy to your apt.conf.d. I just created a file called 98proxy in the /etc/apt/apt.conf.d/ directory, containing the cntlm proxy settings.

# echo 'Acquire::http::Proxy "http://localhost:3128";' | sudo tee /etc/apt/apt.conf.d/98proxy > /dev/null

Or edit the file using your favorite editor.

Second, you'll have to set up your command line proxy, either locally via ~/.bashrc or, as I did, for the whole machine with a proxy.sh script in the /etc/profile.d/ directory. (The .sh extension matters: on Debian-based systems /etc/profile only sources files ending in .sh.) You can copy the following to either your ~/.bashrc file or a proxy script file, i.e.

# sudo touch /etc/profile.d/proxy.sh

# sudo nano /etc/profile.d/proxy.sh

Then copy and paste this:

#!/bin/bash

# cntlm itself is a plain HTTP proxy, so every variable points at an http:// URL

export http_proxy="http://localhost:3128"

export https_proxy="http://localhost:3128"

export ftp_proxy="http://localhost:3128"

export no_proxy="localhost,<your domain>"
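Command line tools usually look for one variable per scheme (http_proxy, https_proxy, ftp_proxy), so it is easy to miss one. A sketch that sets all three from a single value could look like this; the helper name set_proxy is made up, and the port assumes cntlm listens on localhost:3128 as in the apt setting above.

```shell
#!/bin/sh
# Assumption: the local cntlm proxy listens on localhost:3128.
proxy_url="http://localhost:3128"

# Hypothetical helper: point every scheme at the same proxy URL ($1).
set_proxy() {
    for scheme in http https ftp; do
        export "${scheme}_proxy=$1"
    done
}

set_proxy "$proxy_url"

# Show what child processes will see.
env | grep '_proxy=' | sort
```

Running env afterwards is a quick way to confirm that the three variables really carry different names.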

You'll also have to edit the sudoers file to keep your environment, if you ever need to run stuff as root! Use visudo, which checks the syntax before saving:

# sudo visudo

Paste the following to keep your settings. (DISPLAY is not for the proxy, but you might like to have root windows displayed on your GUI as well.)

Defaults env_keep = "DISPLAY"

Defaults env_keep += "proxy"

Defaults env_keep += "http_proxy"

Defaults env_keep += "https_proxy"

Defaults env_keep += "ftp_proxy"

Defaults env_keep += "no_proxy"

Third, you'll need to use the proxy in all your browsers, Spotify etc. Remember to set the no_proxy stuff as well, as you may otherwise have internal domain lookup issues. Change Firefox's settings via the GUI: Edit | Preferences.

I rolled a small company-proxy-settings package containing the changes I mention here; just use it, at your own risk. The package has an additional script that you may want. It is called cntlm_config and you can run it every time you have to change your company password!

Script listing:

# sudo touch /usr/bin/cntlm_config

# sudo nano /usr/bin/cntlm_config

Paste the contents:

#!/bin/bash
# Update /etc/cntlm.conf with a new Windows user name and password.
config=/etc/cntlm.conf
domain="<your domain>"

sudo service cntlm stop

echo -n "Enter your username for windows: "
read -r user

# Read the password one character at a time, echoing a * for each.
unset pass
prompt="Enter password for windows: "
while IFS= read -p "$prompt" -r -s -n 1 char
do
    # Enter yields an empty character: the password is complete.
    if [[ $char == $'\0' ]]
    then
        break
    fi
    prompt='*'
    pass+="$char"
done
echo ""

# Note: user names or passwords containing | $ @ or \ will confuse these substitutions.
sudo perl -p -i -e "s|(Username\t).*$|Username\t$user|g" $config
sudo perl -p -i -e "s|(Password\t).*$|Password\t$pass|g" $config
sudo perl -p -i -e "s|(Domain\t).*$|Domain\t\t$domain|g" $config
sudo perl -p -i -e "s/10[.]0[.]0[.]41:8080/<your proxy>/g" $config
sudo perl -p -i -e "s/Proxy.*10[.]0[.]0[.]42:8080//g" $config
sudo chmod 0600 $config

sudo service cntlm start

Replace <your domain> and <your proxy> in the script with your company settings and you should be good to go. Modify and use at your own risk!

Labels:

cntlm,

linuxmint proxy configuration,

windows proxy

March 26, 2013

Remote connections

You know the drill! You need to fix a thing at work, a 5 minute thing, but your box was never set up to handle remote connections properly. So fix it, you know you'll need it in two shakes of a lamb's tail!

There are a couple of possibilities: VNC (slower than smoking death!), ssh, and some that run on the remote desktop protocol, like XRDP.

Putty & XMing

If you're a Winer, you'll need PuTTY for ssh'ing and an X server; I use XMing. And you'll need to prepare your box if you run the Linux Mint desktop edition.

# sudo aptitude install openssh-server

Then you'll have to allow your remote machine to connect to your box:

# sudo emacs /etc/ssh/sshd_config

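Make sure X11 forwarding is enabled on the server side. In sshd_config the option is called X11Forwarding (ForwardX11 is the matching client option in ssh_config). Remove any # comment sign in front of it and set it to yes so it looks like this:

X11Forwarding yes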

Save and exit, then restart the ssh service. Next you'll have to add your remote machine name to xhost by:

# xhost +<your ip or machine.domain>

Next you'll have to check that the .Xauthority file in your home folder is owned by your user (mine wasn't?)

# ls -l ~/.Xauthority

-rw------- 1 user user 181 Mar 25 13:35 .Xauthority

If not, you should chown it to your user:

# sudo chown user ~/.Xauthority

You'll now be able to connect remotely to your box from any machine with a running X server. Simply start the X server on your client machine, log in via ssh and run the program. In PuTTY you'll have to enable X11 forwarding; there's a setting called just that.

VNC

To use VNC you'll have to install a VNC server on your box, e.g. tightvncserver or x11vnc:

# sudo aptitude install tightvncserver

or

# sudo aptitude install x11vnc

The difference is that tightvncserver will serve a new session, while x11vnc allows access to an existing session. That may be what you'll need if you have a running session on your work box. If you use x11vnc you might want to set a password.

XRDP

Install XRDP and run the daemon.

# sudo aptitude install xrdp

You connect to it using the Windows Remote Desktop Connection utility. You'll get a new screen, so this utility can't steal your current X session.

Labels:

Linux,

Putty,

Remote desktop,

XMing,

XRDP
